What is Apache Kafka?

Apache Kafka is a distributed publish-subscribe messaging system, most commonly used as a message queue. It was developed at LinkedIn and later became an Apache project. Kafka is also used to handle real-time data streams and to collect large volumes of data for real-time analytics.

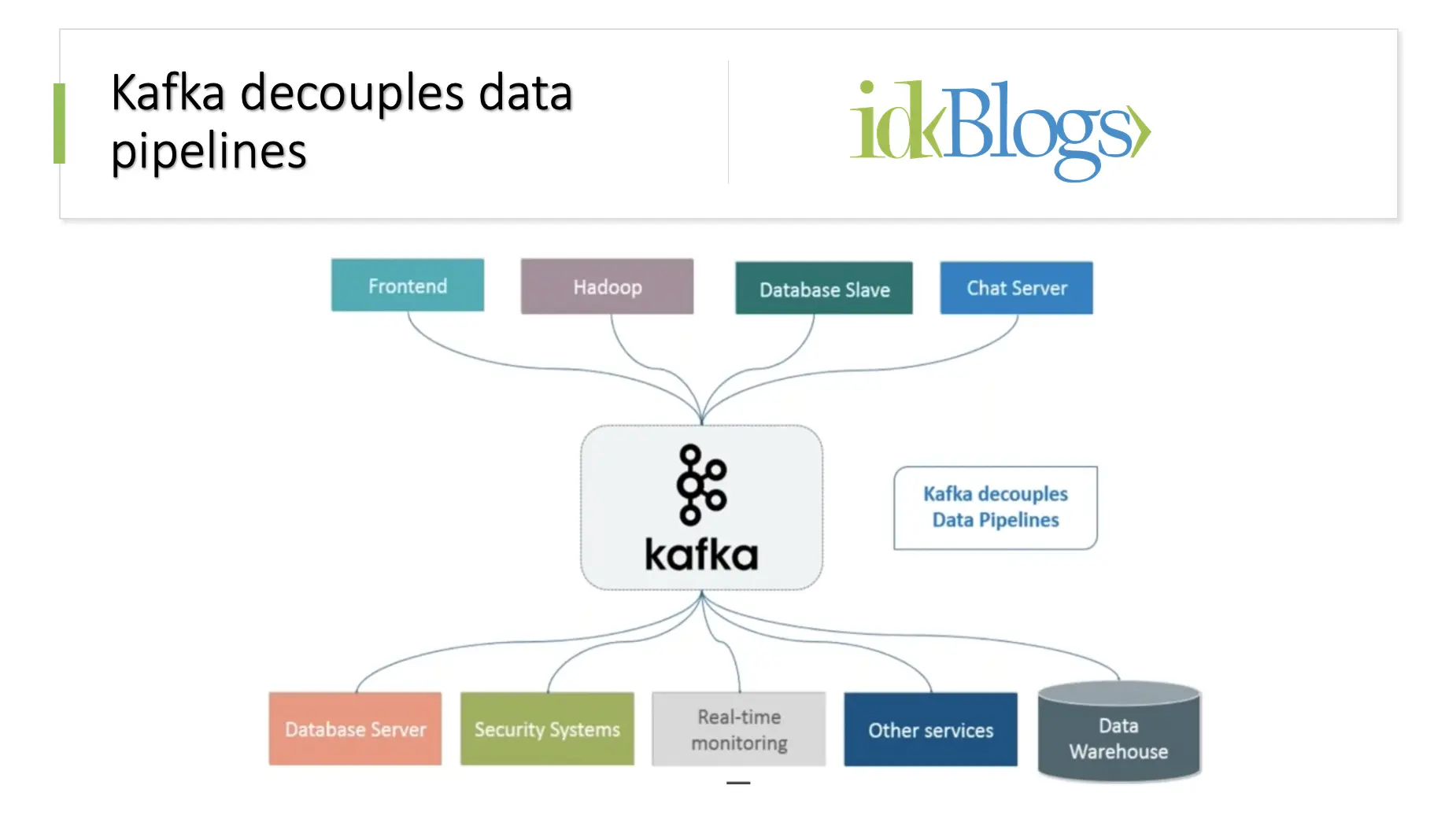

Kafka decouples the data pipeline: the systems that produce data no longer need to know about the systems that consume it, which reduces the complexity of connecting them.

The applications that send messages to Kafka could be anything: a front-end server, a Hadoop server, a database server, or a chat server. These are called Producers.

The applications that read those messages from Kafka, such as a database server, your security system, a monitoring system, other services, or a data warehouse, are called Consumers.

So your producer sends data to Kafka, Kafka stores those messages, and any consumer that wants them can subscribe and receive them. You can have multiple subscribers to a single category of messages.

Adding a new consumer is easy: start it and subscribe it to the message categories it requires.
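The publish-subscribe flow described above can be sketched in a few lines of plain JavaScript. This is only an in-memory illustration, not Kafka itself: the `MiniBroker` class and its `publish` and `subscribe` methods are hypothetical names invented for this example.

```javascript
// In-memory illustration of publish-subscribe. MiniBroker is a hypothetical
// class for this sketch, not part of Kafka or any Kafka client.
class MiniBroker {
  constructor() {
    this.messages = new Map();    // topic name -> stored messages
    this.subscribers = new Map(); // topic name -> subscriber callbacks
  }

  // A consumer subscribes to a category (topic) of messages.
  subscribe(topic, callback) {
    if (!this.subscribers.has(topic)) this.subscribers.set(topic, []);
    this.subscribers.get(topic).push(callback);
  }

  // A producer sends a message; the broker stores it and hands it to
  // every subscriber of that topic.
  publish(topic, message) {
    if (!this.messages.has(topic)) this.messages.set(topic, []);
    this.messages.get(topic).push(message);
    for (const callback of this.subscribers.get(topic) || []) {
      callback(message);
    }
  }
}

const broker = new MiniBroker();
const monitoringInbox = [];
const warehouseInbox = [];

// Two independent consumers subscribe to the same topic.
broker.subscribe('chat', (msg) => monitoringInbox.push(msg));
broker.subscribe('chat', (msg) => warehouseInbox.push(msg));

// The producer only knows the topic name, not who is listening.
broker.publish('chat', 'hello');

console.log(monitoringInbox, warehouseInbox);
```

Both inboxes receive the same message, and adding a third consumer needs only one more `subscribe` call. Real Kafka consumers pull messages from the broker rather than receiving callbacks; the sketch only shows the decoupling between producers and consumers.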

The Kafka cluster is distributed and has multiple machines running in parallel. This is the reason why Kafka is fast, scalable, and fault tolerant.

Integrate Kafka Messaging Queue with NodeJS

Basic Terminology of Kafka

Topic:

A Kafka topic is a category or feed name to which records are published. Topics in Kafka are always multi-subscriber; that is, a topic can have zero, one, or many consumers that subscribe to it and consume its data. In Kafka, you can have sales records published to a sales topic, product records published to a product topic, and so on. This segregates your messages, and each consumer subscribes only to the topics it needs.

Kafka topics are divided into a number of partitions. Partitions allow you to parallelize a topic by splitting its data across multiple brokers. Each partition can be placed on a separate machine, which allows multiple consumers to read from a topic in parallel.
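To make the idea of splitting a topic concrete, here is a small sketch of how records might be spread across partitions by hashing a record key. The `choosePartition` function and its simple string hash are assumptions made for illustration; Kafka clients use their own partitioner, which is not the one shown here.

```javascript
// Illustrative only: map a record key to one of N partitions.
// choosePartition and its hash are hypothetical, not Kafka's real partitioner.
function choosePartition(key, partitionCount) {
  let hash = 0;
  for (const ch of String(key)) {
    // Simple rolling hash kept in the unsigned 32-bit range.
    hash = (hash * 31 + ch.codePointAt(0)) >>> 0;
  }
  return hash % partitionCount;
}

// Each partition is an ordered, append-only list of records.
const partitionCount = 3;
const partitions = Array.from({ length: partitionCount }, () => []);

const records = [
  { key: 'customer-1', value: 'order A' },
  { key: 'customer-2', value: 'order B' },
  { key: 'customer-1', value: 'order C' },
];

for (const record of records) {
  partitions[choosePartition(record.key, partitionCount)].push(record);
}

// Records with the same key always land in the same partition,
// so their relative order is preserved there.
console.log(partitions);
```

Because each partition is an independent list, different consumers could read different partitions at the same time, which is the parallelism the text describes.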

Partition:

Topics are broken up into ordered commit logs called partitions.

Consumer:

A consumer subscribes to one or more topics and consumes data from them. You can have multiple consumers in a consumer group; each consumer labels itself with a consumer group name.

Each record published to a topic is delivered to one consumer instance within each subscribing consumer group. You can also have multiple consumer groups subscribed to the same topic, in which case one record is consumed by multiple consumers: one from each consumer group. Consumer instances can be separate processes or run on separate machines.

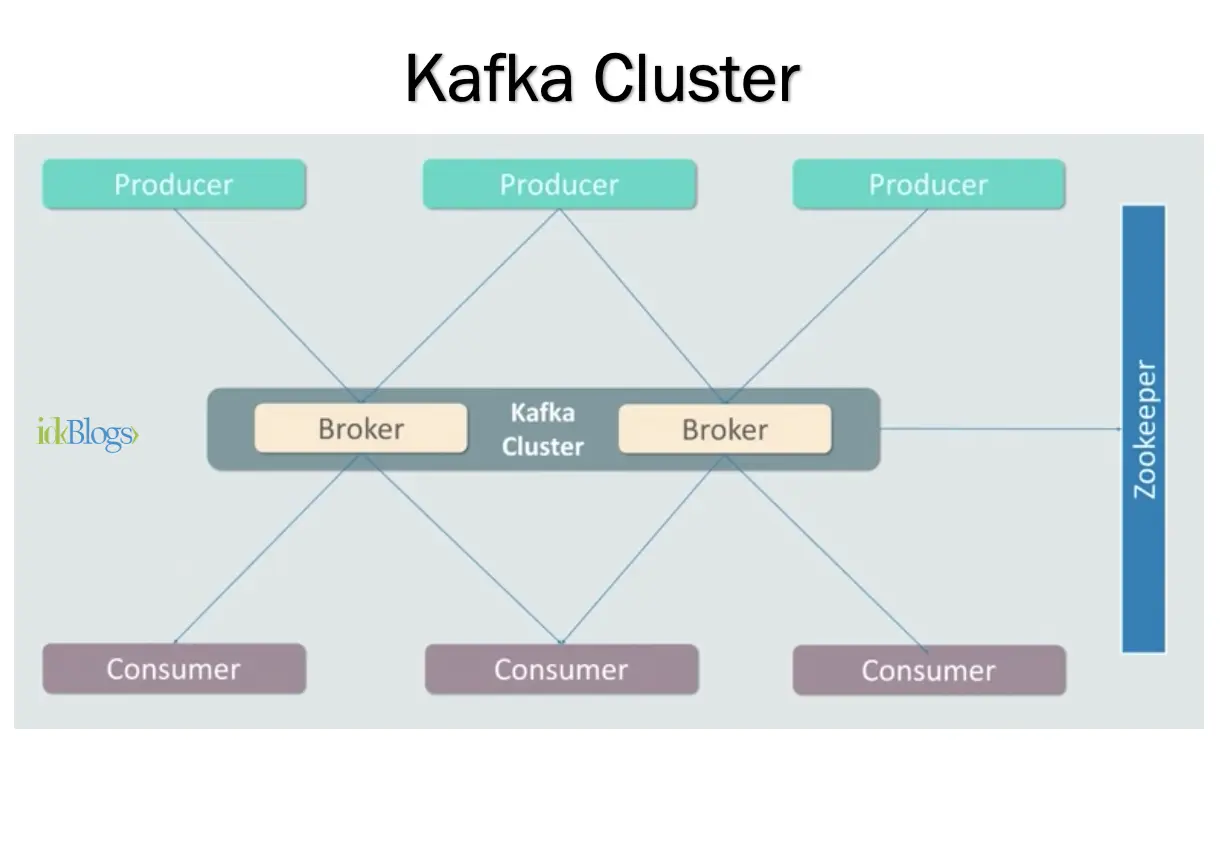
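The delivery rule described above (each record goes to one consumer per group) can be simulated in plain JavaScript. The `deliver` function, the group names, and the round-robin choice of consumer are all assumptions for illustration; in Kafka, work is divided between group members per partition rather than per record.

```javascript
// Simulation of consumer-group delivery. All names here are hypothetical.
const groups = {
  analytics: ['analytics-1', 'analytics-2'],
  billing: ['billing-1'],
};

// For each record, pick exactly one consumer in every group.
// Round-robin by record index is a simplification for this sketch.
function deliver(records, consumerGroups) {
  const received = {}; // consumer name -> records it received
  for (const members of Object.values(consumerGroups)) {
    for (const name of members) received[name] = [];
  }
  records.forEach((record, index) => {
    for (const members of Object.values(consumerGroups)) {
      const chosen = members[index % members.length];
      received[chosen].push(record);
    }
  });
  return received;
}

const received = deliver(['r0', 'r1', 'r2', 'r3'], groups);
console.log(received);
```

The two consumers in the `analytics` group split the four records between them, while the single consumer in the `billing` group receives all four, so every record is seen once per group.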

Brokers:

A Kafka cluster is a set of servers, and each server in the cluster is called a broker. In other words, a broker is a single machine in a Kafka cluster.

Zookeeper:

Zookeeper is another Apache open-source project. It stores metadata about the Kafka cluster, such as broker information and topic details.

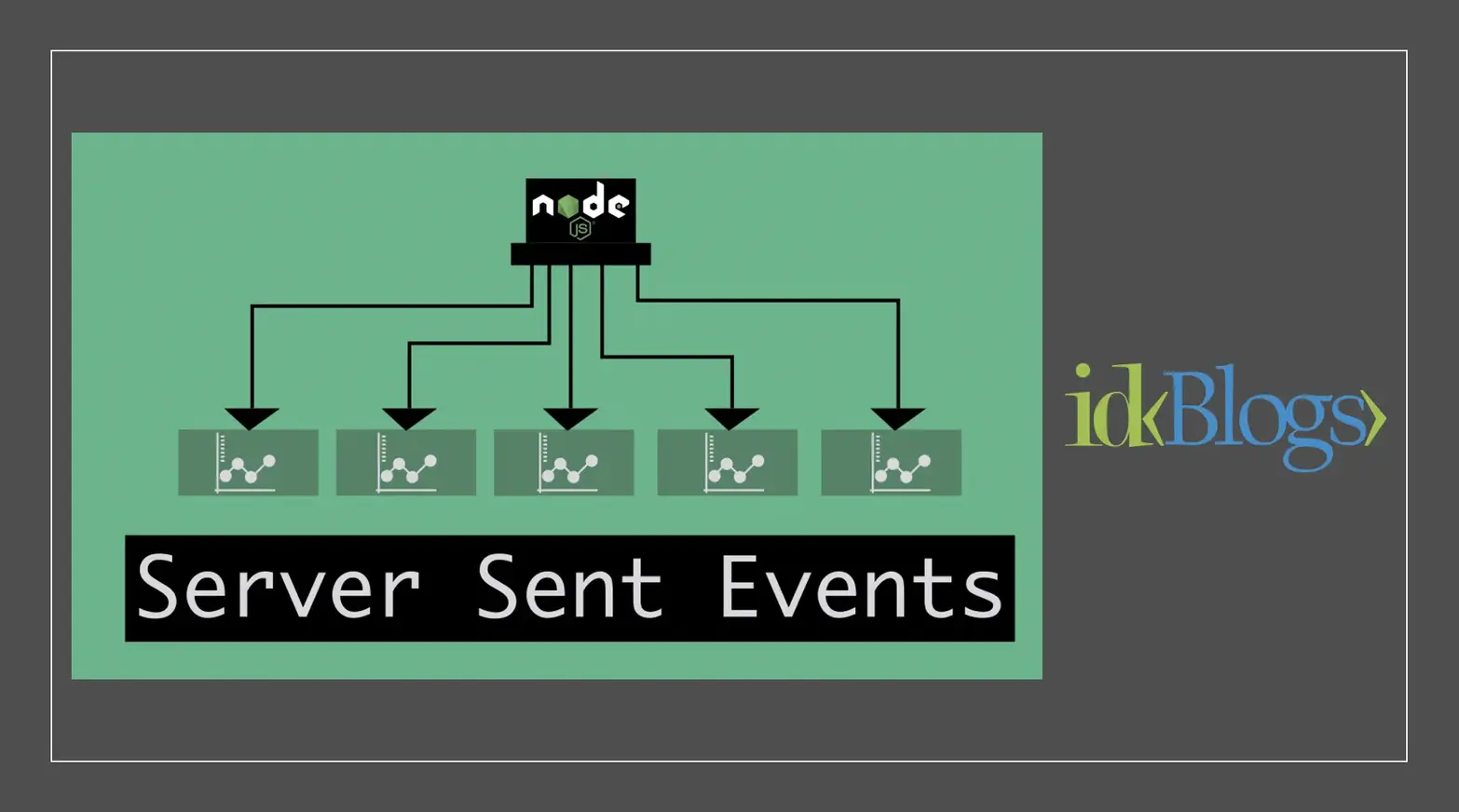

Let's understand the Kafka cluster


In the image above, multiple producers send data to a Kafka broker, and the broker is part of a Kafka cluster. Multiple consumers read that data from the broker, or in other words from the Kafka cluster. The cluster is managed by Zookeeper, which maintains the cluster's metadata.

What are the Kafka features?

👉 High Throughput

👉 Scalability

👉 Prevent Data Loss

👉 Stream Processing

👉 Durability

👉 Replication

👉 Easy to integrate

Let's integrate Kafka with NodeJS

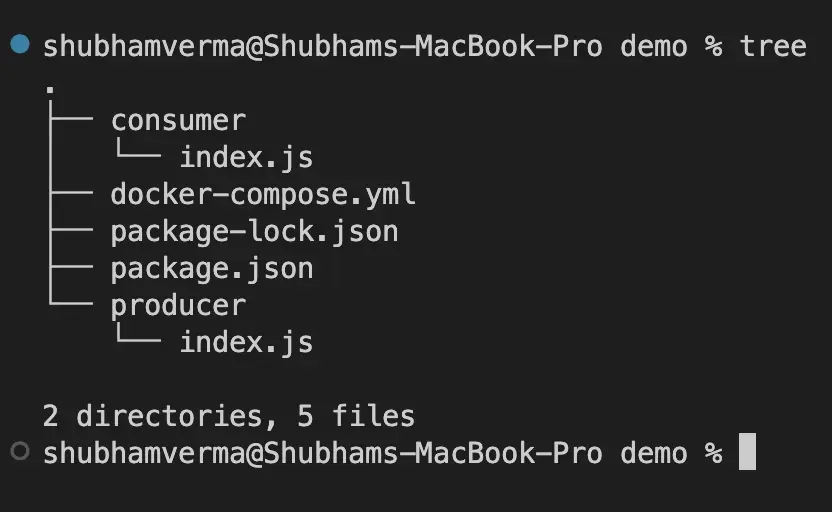

Now that we have a basic theoretical knowledge of Kafka, let's integrate it with NodeJS. For that, we need Docker and NodeJS installed on our machine. After the installation, follow the steps below.

Step 1: Prepare a demo app directory

The root directory is "demo". To start the demo Kafka app, create it with the files used in the following steps: docker-compose.yml and package.json at the root, plus producer/index.js and consumer/index.js.

Step 2: Prepare a docker container

In this demo, we will run Kafka in a Docker container, so we need a docker-compose.yml file to run Kafka on the local machine. Add the following to your docker-compose.yml file.

docker-compose.yml

The docker-compose file above defines two services, zookeeper and kafka. The kafka service depends on zookeeper, and both run in containers on the configured ports.

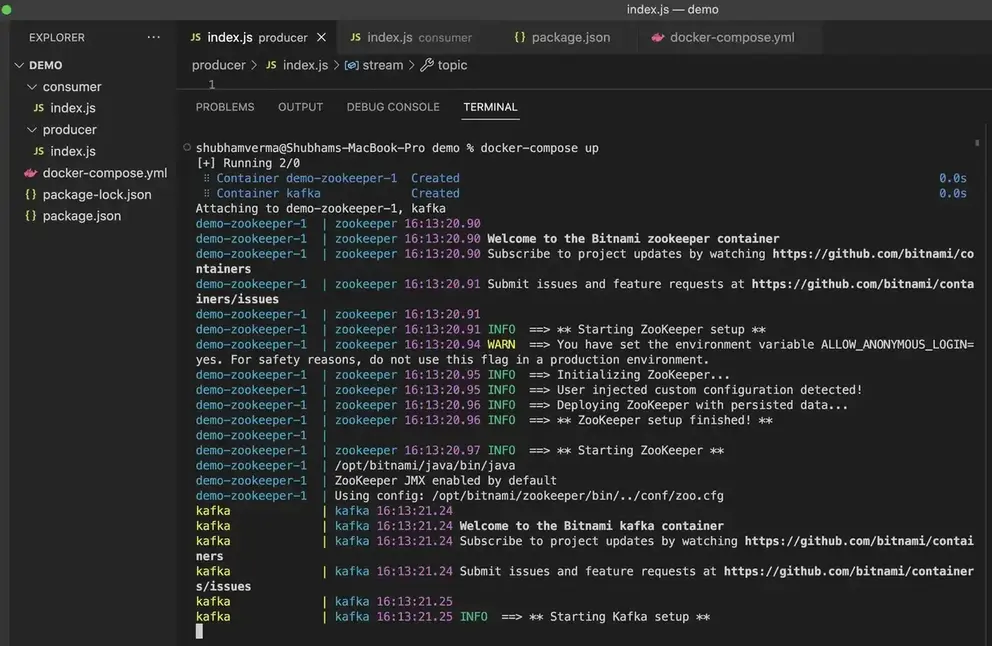

Step 3: Run the docker container

Now we need to start the containers using the command below. It pulls the images for zookeeper and kafka and starts the containers. Before running it, don't forget to start Docker on your machine.

This is what the command's output will look like.


Note: Keep this terminal running. By the end we will have three terminals open at the same time: one for the Docker containers, one for the producer, and one for the consumer.

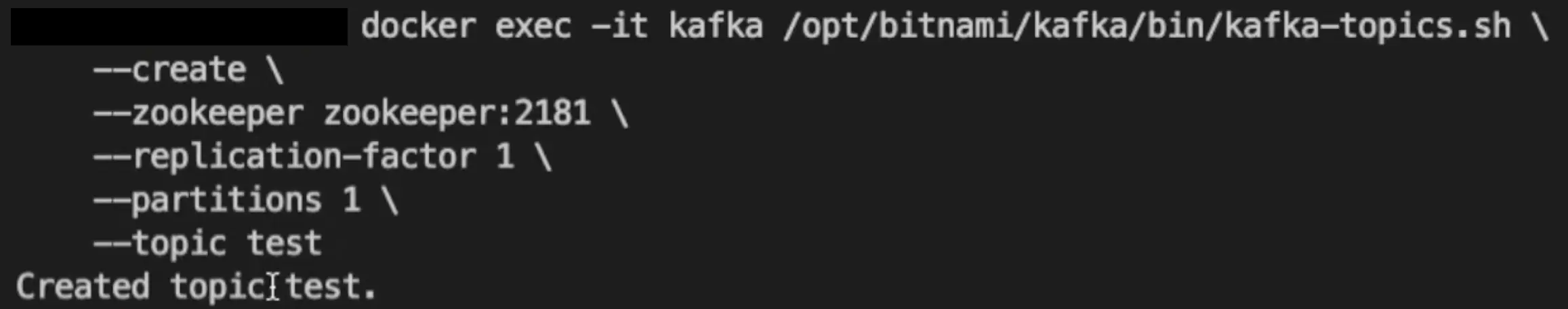

Step 4: Create a topic in Kafka

Before using Kafka, we need to decide on a topic (explained above). A Kafka topic is a category or feed name to which records are published. We will publish our messages to this topic; it is effectively our message queue.

We will create a topic named "test". To create the topic, run the command below.

If the topic is created successfully, you will see the message "Topic created test", as shown below.


Step 5: Create package.json file

Now it's time to integrate Kafka with NodeJS. For this, create a package.json file with the contents below.

The package.json defines two scripts, "start:producer" and "start:consumer". We will use these scripts later, once the producer and consumer are finished.

It also declares a dependency on the npm package "node-rdkafka". We will use this package to publish messages to Kafka and to consume them in the consumer.

Step 6: Install the dependencies

Next, we need to install the dependencies that we added to the package.json file. To do so, run "npm install" in the demo directory.

Step 7: Create a Producer

Now it's time to create a producer. The producer will publish a message to Kafka every 4 seconds.

Let's write the code below into producer/index.js.

producer/index.js

Let's understand the code above.

We import 'node-rdkafka' and create a stream using 'Kafka.Producer.createWriteStream'. We pass it the required options and the topic name 'test', which is the topic our messages will be published to.

Any exception or error on the stream is handled using KafkaStream.on('error').

The publishMessage() method generates a random number and pushes it into the queue. publishMessage() is called every 4 seconds, so every 4 seconds the producer publishes a random number to our topic.
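As a rough illustration of the producer described above, here is a minimal sketch built on node-rdkafka's `Producer.createWriteStream`. Several details are assumptions rather than facts from this article: the broker address `localhost:9092`, the 0 to 999 range of the random number, the helper name `randomMessage`, and the choice to pass the `node-rdkafka` module into `startProducer` so that nothing connects until the function is called.

```javascript
// producer/index.js (sketch). Assumes a broker on localhost:9092 and the
// "test" topic from Step 4; adjust to match your docker-compose.yml.

// Generate the random number to publish (the 0-999 range is an assumption).
function randomMessage() {
  return String(Math.floor(Math.random() * 1000));
}

// Write one message to the stream. write() returns false when the
// stream's internal queue is full; this sketch only logs that case.
function publishMessage(stream) {
  const message = randomMessage();
  const queued = stream.write(Buffer.from(message));
  console.log(queued ? `Queued message: ${message}` : 'Stream queue is full');
  return message;
}

// Create the write stream and publish every 4 seconds.
// Kafka is the node-rdkafka module, passed in by the caller.
function startProducer(Kafka, intervalMs = 4000) {
  const stream = Kafka.Producer.createWriteStream(
    { 'metadata.broker.list': 'localhost:9092' }, // assumed broker address
    {},                                           // default topic options
    { topic: 'test' }
  );

  stream.on('error', (err) => {
    console.error('Error in the Kafka stream:', err);
  });

  // Return the timer so the caller can stop publishing with clearInterval().
  return setInterval(() => publishMessage(stream), intervalMs);
}

// To run against a real broker, uncomment the next line:
// startProducer(require('node-rdkafka'));
```

A successful `write()` only means the message was queued locally in the stream; it does not confirm that the broker received it.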

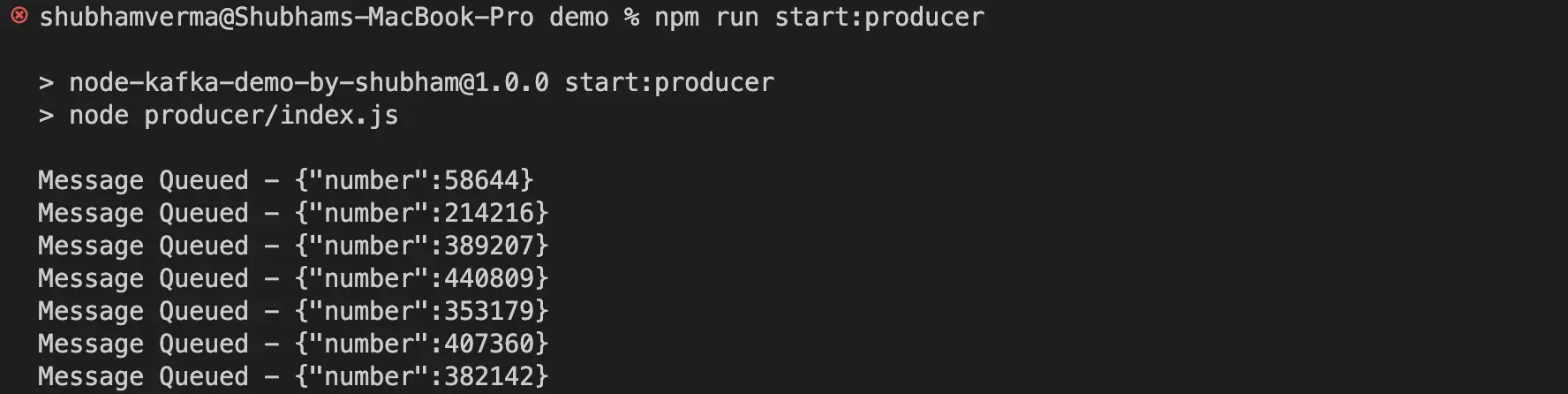

Step 8: Run the producer

Now it's time to run the producer using the "start:producer" script (npm run start:producer).

After running the command, the producer starts and begins publishing messages into the queue, as shown in the result below.


Note: Keep this terminal running along with the docker terminal.

Step 9: Create Consumer

Now it's time to create the consumer, which consumes the messages the producer has already queued. When the consumer starts, it reads all the previous messages stored in the topic and then keeps consuming new messages as the producer publishes them.

Let's write the code below into the file "consumer/index.js" to consume the messages.

consumer/index.js

In the code above, we start consuming messages once the consumer is ready.
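A consumer along these lines could be sketched with node-rdkafka's `KafkaConsumer` in flowing mode, as below. The broker address `localhost:9092`, the group id `demo-group`, the helper name `describeMessage`, and the `auto.offset.reset` setting (used here so a new group starts from the oldest stored message) are assumptions made for this example.

```javascript
// consumer/index.js (sketch). Assumes a broker on localhost:9092; the
// group id "demo-group" is a made-up name for this example.

// Turn a received Kafka message into a printable line.
function describeMessage(data) {
  return `Received: ${data.value.toString()}`;
}

// Kafka is the node-rdkafka module, passed in by the caller.
function startConsumer(Kafka) {
  const consumer = new Kafka.KafkaConsumer(
    {
      'group.id': 'demo-group',
      'metadata.broker.list': 'localhost:9092', // assumed broker address
    },
    // Start from the oldest stored message when the group has no saved offset.
    { 'auto.offset.reset': 'earliest' }
  );

  consumer
    .on('ready', () => {
      // Subscribe and start consuming only once the connection is ready.
      consumer.subscribe(['test']);
      consumer.consume();
    })
    .on('data', (data) => {
      console.log(describeMessage(data));
    });

  consumer.connect();
  return consumer;
}

// To run against a real broker, uncomment the next line:
// startConsumer(require('node-rdkafka'));
```

Registering the `ready` handler before calling `connect()` ensures the subscription happens as soon as the connection is established.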

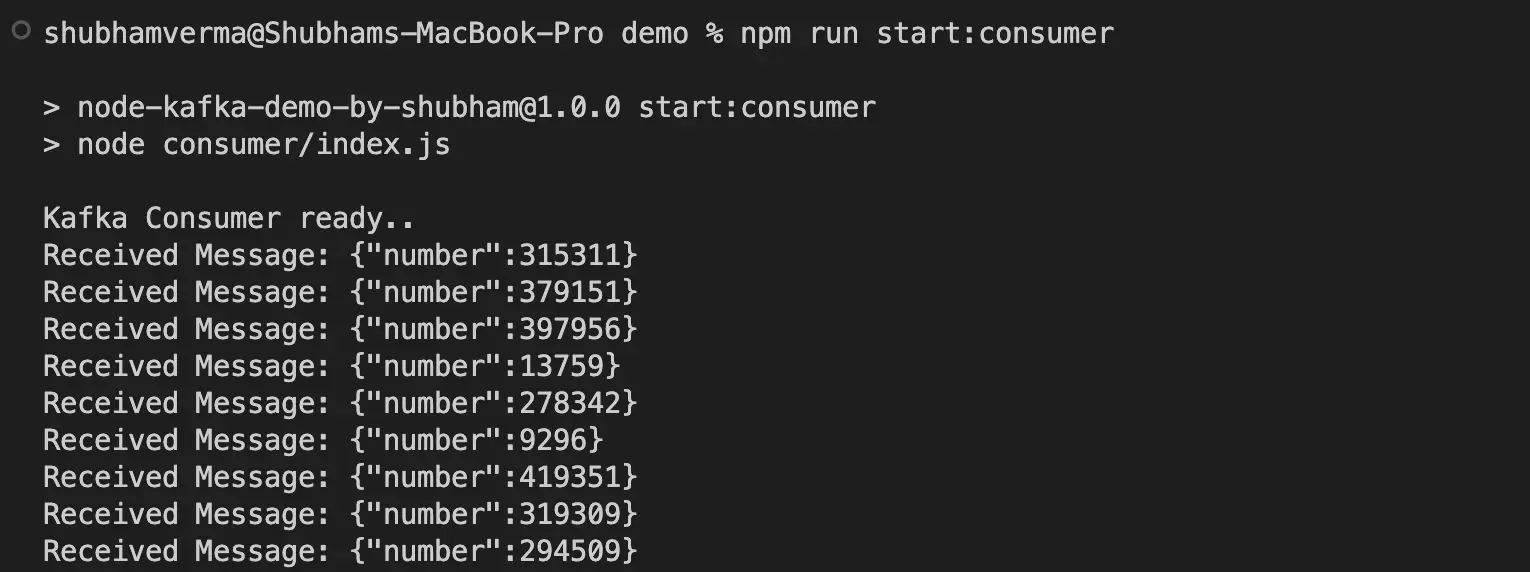

Step 10: Run the Consumer

Now it's time to run the consumer using the "start:consumer" script (npm run start:consumer).

After running the command, you will see the consumer first consume the data already stored by our producer and then continue consuming each new message as it is published to the topic. See the output below.


Conclusion:

In this article, we learned what Apache Kafka is and covered its basic terminology (topic, partition, consumer, broker, and Zookeeper), the Kafka cluster, and Kafka's features. We then integrated Kafka with NodeJS.
